AI Dev & Researcher

I completed some of these projects as a freelance consultant for companies; others are personal research initiatives. Only the most relevant projects are listed here.

LLMs for Smart Contract Vulnerability Detection

As the first ML hire at a blockchain security startup, I led the development of a multi-stage LLM-based system to detect, explain, and fix vulnerabilities in smart contracts. Starting with fine-tuned open-source models and later transitioning to OpenAI's APIs, we built a pipeline with over 20 models covering different stages of vulnerability analysis. My responsibilities spanned:

- Designing and maintaining the ML architecture

- Developing models for detection, explanation, patch generation, and verification

- Optimizing the verification stage, which cut the false-positive rate from 50% to under 10%

- Managing end-to-end ML workflows: data collection, preprocessing, training, and evaluation

- Contributing to team growth, mentoring junior ML engineers, and aligning with product and security audit teams

- Helping scale the company from 3 to 10 people during my tenure
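
As a rough illustration of the staged design described above (detection, explanation, patch generation, verification), the sketch below chains four stage functions and keeps only the findings that pass verification. Every name here (`Finding`, `detect`, `explain`, `propose_patch`, `verify`, `run_pipeline`) is hypothetical, and the keyword checks are simple stand-ins for model calls, not the actual system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    """One candidate vulnerability in a contract (hypothetical schema)."""
    line: int
    kind: str
    explanation: str = ""
    patch: str = ""


def detect(source: str) -> List[Finding]:
    # Stand-in for a detection model: flag lines with risky-looking patterns.
    findings = []
    for number, text in enumerate(source.splitlines(), start=1):
        if "call.value" in text or "tx.origin" in text:
            findings.append(Finding(line=number, kind="suspicious-call"))
    return findings


def explain(source: str, finding: Finding) -> Finding:
    # Stand-in for an explanation model.
    finding.explanation = f"Line {finding.line} matches the pattern '{finding.kind}'."
    return finding


def propose_patch(source: str, finding: Finding) -> Finding:
    # Stand-in for a patch-generation model.
    finding.patch = f"// TODO: review line {finding.line}"
    return finding


def verify(source: str, finding: Finding) -> bool:
    # Stand-in for a verification model: reject findings on commented-out lines.
    text = source.splitlines()[finding.line - 1].strip()
    return not text.startswith("//")


def run_pipeline(source: str) -> List[Finding]:
    """Run detect -> explain -> patch -> verify and keep verified findings only."""
    confirmed = []
    for finding in detect(source):
        finding = propose_patch(source, explain(source, finding))
        if verify(source, finding):
            confirmed.append(finding)
    return confirmed


contract = "owner = tx.origin;\n// msg.sender.call.value(1)();\nx = 1;"
report = run_pipeline(contract)
```

In this toy input the detector flags two lines, and the verification step drops the one that is commented out, which is the kind of filtering a dedicated verification stage is meant to provide.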

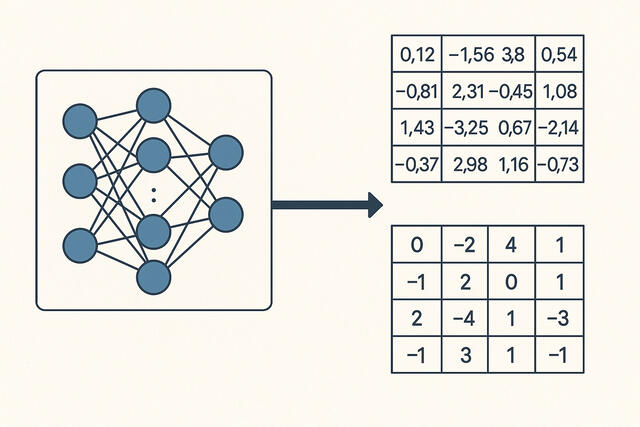

Embedded Quantized Models

Worked on two edge AI projects focused on deploying quantized deep learning models for real-time applications:

- Developed a lightweight face embedding model optimized for person identification on resource-constrained devices

- Built an image classification system to detect adversarial or manipulated images for platform security

- Collaborated with a 5-person team of researchers and engineers, optimizing models for size, speed, and accuracy under strict hardware limitations.
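
For readers unfamiliar with quantization, here is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme for shrinking model weights. The scheme actually used in these projects is not stated, so the choice of scheme and the function names (`quantize_int8`, `dequantize`) are assumptions for illustration.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map a non-empty list of floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# The rounding error per weight is bounded by half of the scale.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale per value.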

Generative AI for Personalized Face Image Creation and Modification

Worked with startups to develop generative AI systems focused on creating and modifying human face images using minimal input data and constrained hardware environments:

- Personalized Image Generation: Built a pipeline to generate realistic images of individuals from just a few example photos. Developed an automated preprocessing algorithm to filter low-quality inputs, followed by rapid LoRA-based fine-tuning of Stable Diffusion models, completing the entire personalization process in under one minute. Delivered a Dockerized prototype for integration into a production web platform.

- Face Attribute Editing: Led the training of models to modify specific facial features (e.g., hairstyle changes). Due to limited GPU resources, focused on lightweight GAN architectures and experimented with multiple model variations to balance realism and performance. Conducted over 6 months of iterative R&D to meet quality and runtime requirements.
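
The LoRA-based fine-tuning mentioned above rests on a simple idea: the pretrained weight matrix W stays frozen and only a low-rank update B·A is trained, scaled by alpha / r. The pure-Python sketch below shows that arithmetic on tiny matrices; the helper names (`matmul`, `lora_effective_weight`) and the example values are assumptions for illustration, not code from the project.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_effective_weight(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where r is the adapter rank."""
    rank = len(a)          # A has shape (r, in_features)
    delta = matmul(b, a)   # (out_features, r) @ (r, in_features)
    scaling = alpha / rank
    return [
        [w[i][j] + scaling * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # frozen pretrained weight (2 x 3)
a = [[1.0, 2.0, 3.0]]                    # trainable, rank r = 1 (1 x 3)
b_init = [[0.0], [0.0]]                  # B starts at zero, so W is unchanged
b_trained = [[1.0], [0.0]]               # hypothetical values after training

before = lora_effective_weight(w, a, b_init, alpha=2.0)
after = lora_effective_weight(w, a, b_trained, alpha=2.0)
```

Because only A and B are trained, the number of trainable parameters is r × (in + out) rather than in × out, which is what makes fast personalization on limited hardware plausible.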

Stable Diffusion

This project provides an educational implementation of diffusion models, designed specifically to be trained from scratch on a single NVIDIA RTX 4090 GPU. Built using PyTorch and containerized with Docker for ease of setup and reproducibility, it serves as a clean, modular starting point for exploring the inner workings of diffusion-based generative models.

Focused on clarity and learning rather than production deployment, this implementation is aimed at researchers, students, and developers who want to study diffusion training dynamics and experiment with architecture variations on a single consumer GPU.
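
To make the training setup concrete, here is a small standard-library sketch of the forward (noising) step used in DDPM-style diffusion training: a clean sample x0 is mixed with Gaussian noise according to a cumulative schedule. The linear schedule, its endpoints (1e-4 to 0.02 over 1000 steps), and the function names are assumptions taken from the common DDPM setup, not necessarily what this repository uses.

```python
import math
import random
from typing import List, Tuple


def linear_beta_schedule(steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Noise variances that grow linearly from beta_start to beta_end."""
    if steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * t / (steps - 1)
            for t in range(steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    alpha_bars, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(x0: List[float], t: int, alpha_bars: List[float],
              rng: random.Random) -> Tuple[List[float], List[float]]:
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal * v + noise_scale * e for v, e in zip(x0, eps)]
    return xt, eps


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [0.5, -0.5, 1.0]
xt, eps = add_noise(x0, 500, alpha_bars, rng)
```

During training, a network is typically asked to predict `eps` from `xt` and `t`; by the final step almost none of the original signal remains.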

HashNeRF

This project is a PyTorch-based implementation of the Hash NeRF architecture, inspired by research on neural radiance fields that use multi-resolution hash encoding for fast and memory-efficient 3D scene representation. Designed to reproduce and explore the core ideas from the corresponding paper, this implementation provides a clean and modular codebase suitable for both educational and experimental use.

The entire workflow (data preprocessing, model training, and rendering) is containerized using Docker for ease of deployment and environment consistency. This makes it easy to get started on any system with minimal setup.
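
As a sketch of what multi-resolution hash encoding involves, the snippet below computes grid resolutions for several levels and maps the eight voxel corners around a 3D point to slots in a fixed-size hash table, using the XOR-of-primes spatial hash common in this line of work. Whether this repository uses exactly this formulation is an assumption, and the function names and parameter values are illustrative only.

```python
import math
from typing import List, Sequence, Tuple

# Per-axis multipliers commonly used for this spatial hash.
PRIMES = (1, 2654435761, 805459861)


def level_resolutions(num_levels: int, base_resolution: int,
                      max_resolution: int) -> List[int]:
    """Grid resolutions growing geometrically from base to (about) max."""
    if num_levels == 1:
        return [base_resolution]
    growth = math.exp(
        (math.log(max_resolution) - math.log(base_resolution)) / (num_levels - 1)
    )
    return [int(math.floor(base_resolution * growth ** level))
            for level in range(num_levels)]


def hash_index(corner: Tuple[int, int, int], table_size: int) -> int:
    """XOR each non-negative coordinate times its prime, then wrap into the table."""
    h = 0
    for coord, prime in zip(corner, PRIMES):
        h ^= coord * prime
    return h % table_size


def corner_indices(point: Sequence[float], resolution: int,
                   table_size: int) -> List[int]:
    """Hash-table slots of the 8 voxel corners around a point in [0, 1]^3."""
    base = [int(math.floor(p * resolution)) for p in point]
    indices = []
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                corner = (base[0] + dx, base[1] + dy, base[2] + dz)
                indices.append(hash_index(corner, table_size))
    return indices


resolutions = level_resolutions(num_levels=6, base_resolution=16, max_resolution=512)
table_size = 2 ** 14
slots_per_level = [corner_indices((0.3, 0.7, 0.1), r, table_size) for r in resolutions]
```

A full encoder would look up a small trainable feature vector at each slot, interpolate the eight corners trilinearly, and concatenate the results across levels before passing them to the MLP.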

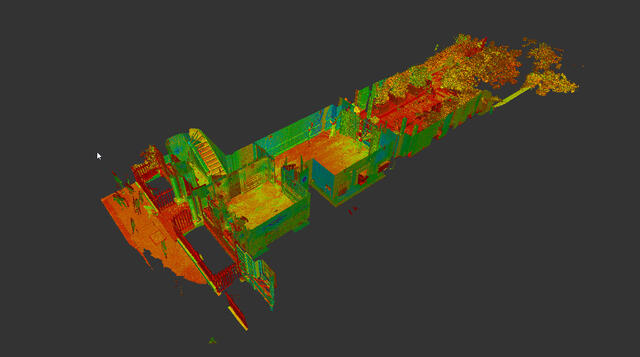

3D Point Cloud Instance Segmentation

Developed a prototype for instance segmentation of large-scale 3D point clouds generated by laser scanning of indoor environments. The system classified points into semantic objects (e.g., walls, furniture) and grouped them into instances. While the method worked well in smaller scenes, scaling was limited by the client's GPU constraints.

3D Virtual World Generation from Text

Joined a 5-month R&D effort to train a reinforcement learning agent capable of generating 3D virtual worlds using Houdini. The agent learned to operate Houdini's GUI directly (clicking buttons and navigating menus) to construct scenes based on text prompts. Worked alongside 6 engineers on RL training, Houdini automation, and reward modeling.

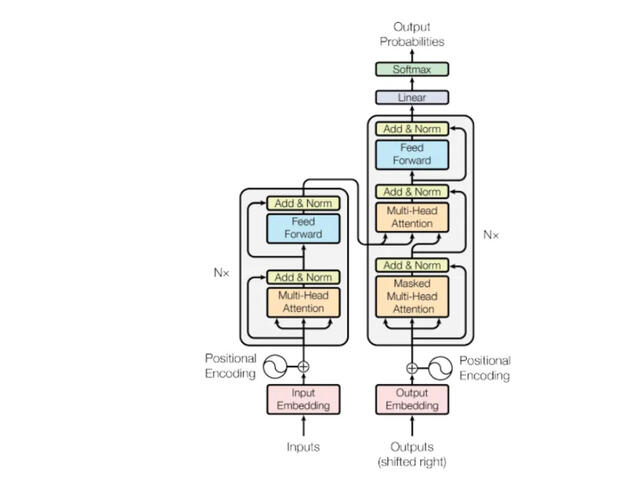

LLM Transformer

This project implements a transformer-based code completion system with a primary focus on the Kotlin programming language, while remaining flexible enough to be trained on other programming languages. Designed for educational and experimental purposes, the model is optimized to train and run efficiently on a single NVIDIA RTX 4090 GPU.

Built using PyTorch and packaged in Docker, the system supports end-to-end workflows, from dataset preparation and tokenization to model training and inference. The codebase is modular, making it easy to adapt the model to other languages, integrate with IDE plugins, or experiment with different architectures (e.g., GPT-style decoders or encoder-decoder models).

Want to get in touch?

I'm open to collaborations, project proposals, or just a chat about AI.

Contact me